Robot-project

Design for imperfect.

Introduction

Because artificial intelligence is still underdeveloped, agents make many mistakes. How to design for such imperfect agents, so that they seem smarter and sometimes more lovable, is an important topic in Human-Robot Interaction.

Here we propose some ideas and build a simple prototype system with Arduino and Processing to test them.

This project is based on a workshop with the Georgia Institute of Technology.

Concept Design

First, we identified the imperfections of current robots.

- Agents still lack the ability to actively initiate interaction.

- Robots struggle to answer some questions.

- Most robots are used in a single scenario and cannot socialize.

- Robots are more of a tool than a living being with secrets.

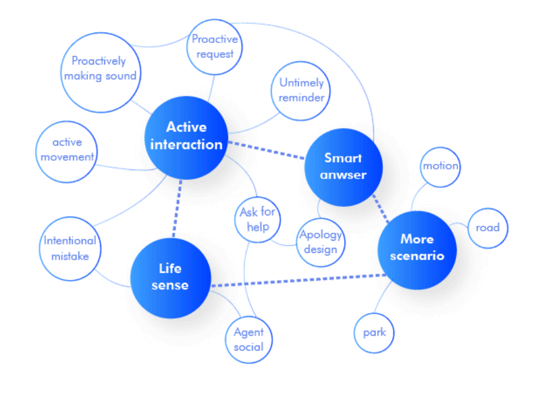

Based on these imperfections, we focus on improving four aspects: active interaction, a sense of life, smart answers, and more scenarios. We propose a new kind of robot that can socialize with other robots in order to promote social interaction among people. It is also an individual rather than a tool, always ready to ask questions and make requests of people.

Design Process

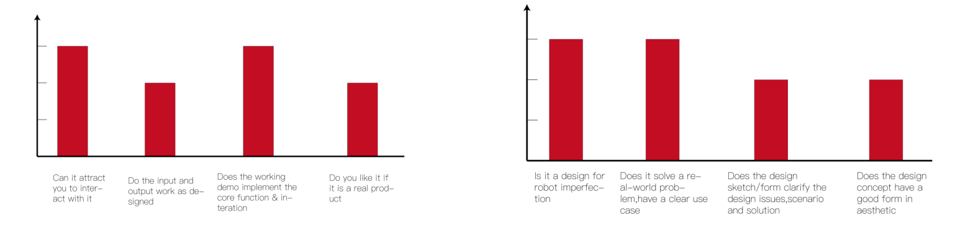

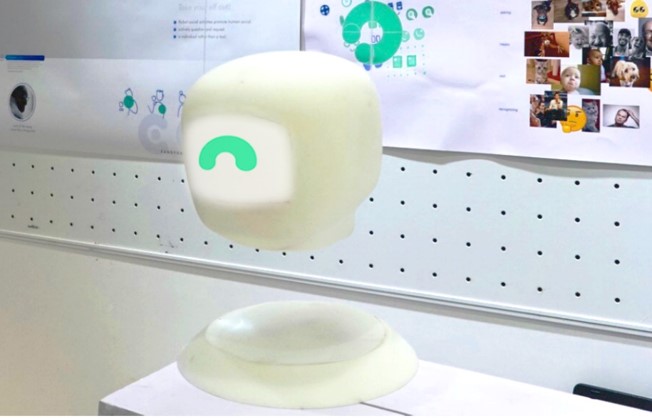

We first presented an appearance design and then ran peer testing to improve it. After several rounds of this process, we arrived at a satisfactory appearance.

It combines the ideas of activeness, convenience, and the unknown. The result of one round of peer testing is shown below.

The Rejected Designs

The Final Design

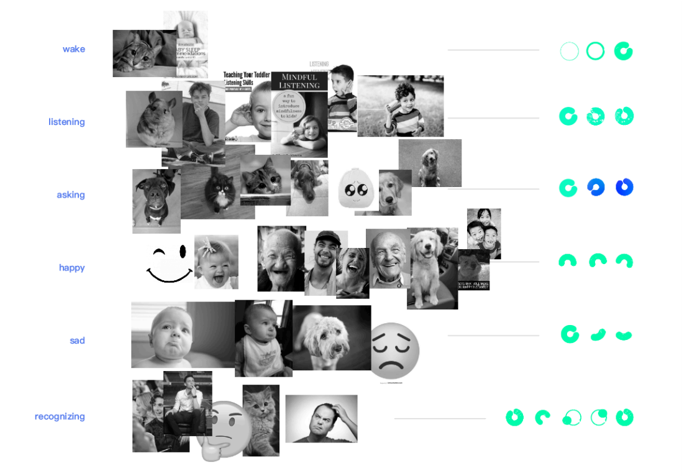

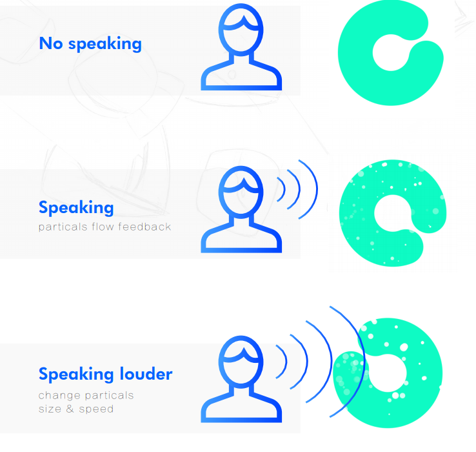

The symbolic expressions on the agent’s interface were designed by analyzing the characteristics of human behavior and expression.

We focus on the three key factors summarized earlier: sociability, activeness, and independence.

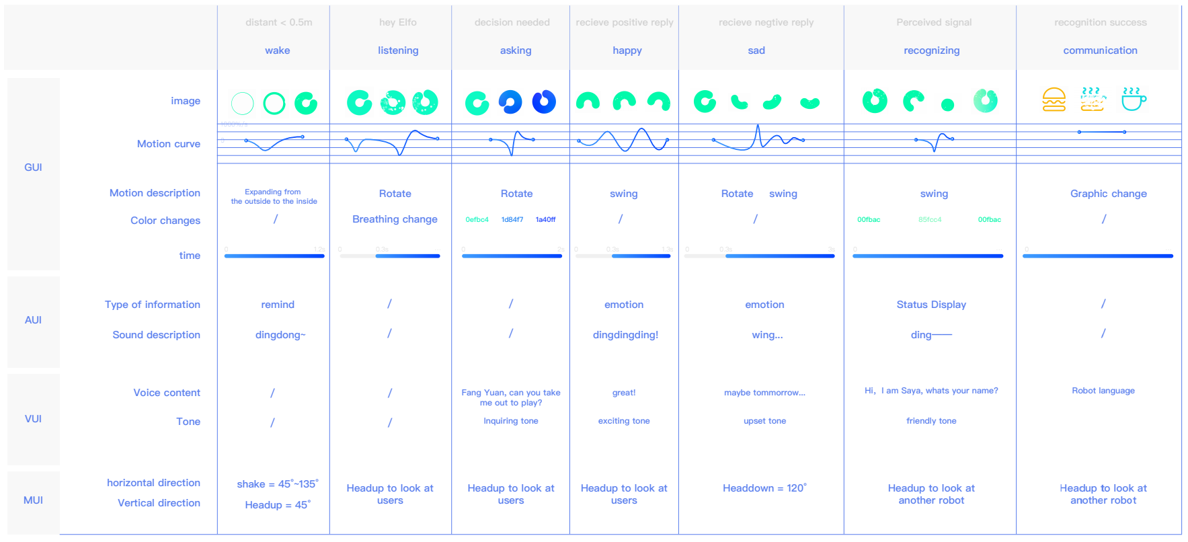

Finally, we decided the details of each behavior of our robot, including the GUI (graphical user interface), AUI (audio user interface), VUI (voice user interface), and MUI (movement user interface), as well as the conditions (both distance and commands) that trigger them.
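
One way to picture these trigger conditions is as a small lookup from distance and command to a behavior. The sketch below is only an illustration in plain C++ (the language Arduino sketches are written in). The zone thresholds, the `hello` command, and every output string are hypothetical placeholders, not values from the project.

```cpp
#include <cassert>
#include <string>

// Hypothetical distance zones; the project does not state its thresholds.
enum class Zone { Far, Near, Close };

// One behavior bundles the four output channels named in the text.
struct Behavior {
    std::string gui;  // what the screen shows
    std::string aui;  // non-speech sound
    std::string vui;  // spoken line
    std::string mui;  // head movement
};

// Map a measured distance (in centimeters) to a zone.
// The 50 cm and 150 cm cut-offs are assumptions for illustration.
Zone classifyDistance(float cm) {
    assert(cm >= 0.0f && "distance must be non-negative");
    if (cm < 50.0f) return Zone::Close;
    if (cm < 150.0f) return Zone::Near;
    return Zone::Far;
}

// Pick a behavior from both trigger conditions.
// In this sketch a recognized command takes priority over distance.
Behavior selectBehavior(float distanceCm, const std::string& command) {
    if (command == "hello") {
        return {"smile", "chime", "Hi there!", "nod"};
    }
    Zone zone = classifyDistance(distanceCm);
    if (zone == Zone::Close) {
        return {"curious eyes", "soft beep", "May I ask you something?", "tilt head"};
    }
    if (zone == Zone::Near) {
        return {"look toward person", "", "", "turn head"};
    }
    return {"idle face", "", "", "hold still"};
}
```

For example, `selectBehavior(100.0f, "")` falls in the assumed Near zone and returns a behavior whose movement is `"turn head"`. Letting commands override distance is just one possible priority rule; the real design may combine the two conditions differently.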

Prototype

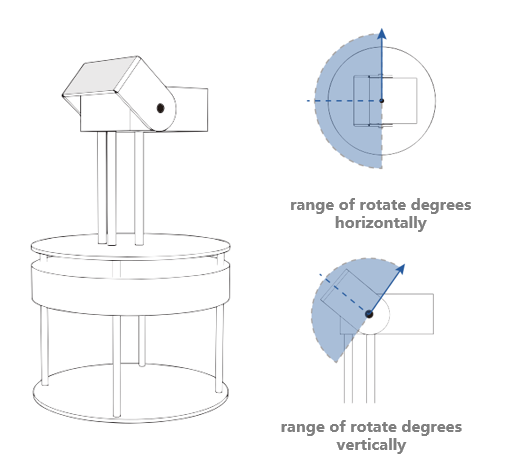

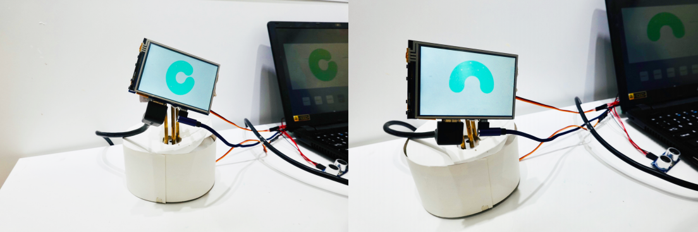

With two servo motors controlled by an Arduino, we can simulate the head movements of our robot. A small screen mounted on top of the head displays the GUI. We then programmed in Processing together with Arduino to test the proposed reactions of our robot in different situations. A test platform built in Processing makes the testing process easier.
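
The link between the Processing program and the Arduino board is not described in detail, so the following is only a guess at how head angles could be exchanged as text lines over a serial connection. The `H,<pan>,<tilt>` message format and all function names are hypothetical. The helpers are written in plain C++ so the logic can be checked without hardware; on the board, the parsed angles would then be handed to the servos (for instance through the Arduino Servo library's `write()`).

```cpp
#include <algorithm>
#include <cstdio>
#include <string>

// Hobby servos are normally driven with angles from 0 to 180 degrees.
int clampAngle(int degrees) {
    return std::max(0, std::min(180, degrees));
}

// Sender side: build one text line such as "H,90,45\n".
// Out-of-range angles are clamped before sending.
std::string formatHeadCommand(int pan, int tilt) {
    return "H," + std::to_string(clampAngle(pan)) + "," +
           std::to_string(clampAngle(tilt)) + "\n";
}

// Receiver side: parse one line into pan and tilt angles.
// Returns false and leaves the outputs untouched if the line is malformed.
bool parseHeadCommand(const std::string& line, int& pan, int& tilt) {
    int p = 0;
    int t = 0;
    if (std::sscanf(line.c_str(), "H,%d,%d", &p, &t) != 2) {
        return false;
    }
    pan = clampAngle(p);
    tilt = clampAngle(t);
    return true;
}
```

Clamping on both sides is a deliberate redundancy in this sketch: a malformed or out-of-range message then cannot push a servo past its mechanical limits.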

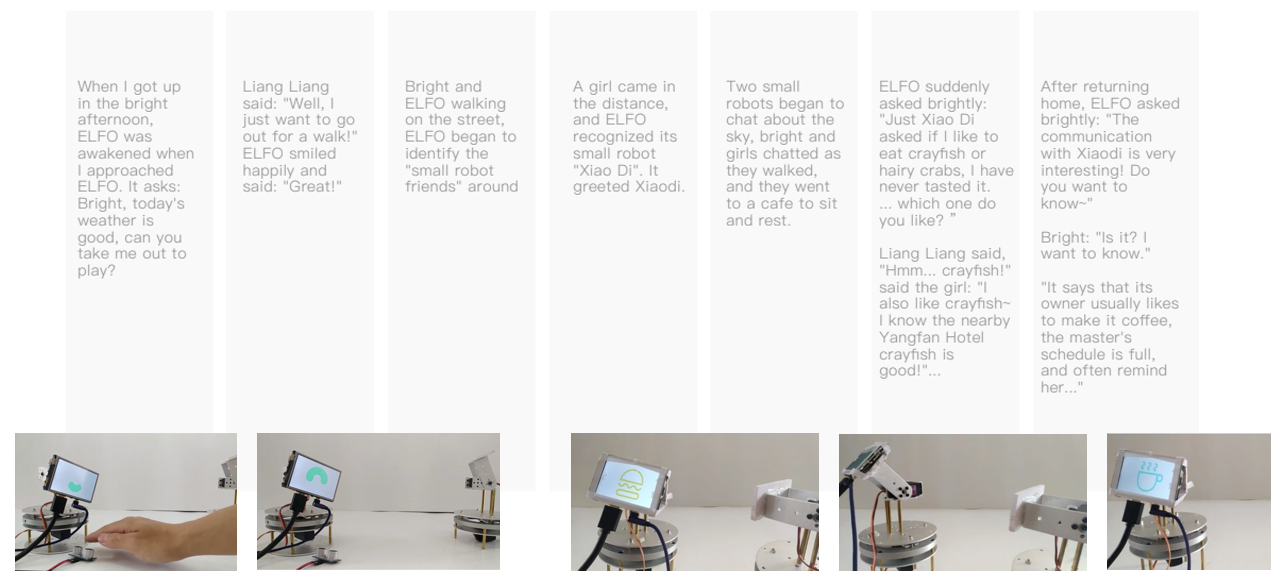

We then wrote a story to test the interactions of the robot we designed.

Finally, we proposed a series of robot interactions using its GUI, AUI, VUI, and MUI in a specific situation. This is a preliminary exploration, and more work should be done on other situations.